VLMs, LLMs, Agents & RAG

Dental Chatbot - AI Appointment Booking System

An AI-powered dental appointment booking system built with Anthropic's Claude and LangChain. The natural-language chatbot uses a Next.js frontend, a Node.js backend, and a FastAPI microservice, and it handles complex date parsing and conversational booking.

Tools and Features:

- FastAPI & Node.js

- RAG, LangChain & Vector-Search

- Next.js

- PostgreSQL

- Anthropic Claude API

TechMart AI Assistant

An enterprise-grade RAG (Retrieval-Augmented Generation) customer service system for electronics retail. Its hybrid architecture combines structured PostgreSQL queries with Pinecone vector search over documents to deliver contextual responses in both Arabic and English, streamed in real time.

Tools and Features:

- FastAPI & Vue.js 3

- OpenAI GPT-4 & Embeddings

- Pinecone Vector Database

- PostgreSQL & Redis

- RAG, LangChain & Vector-Search

- Multilingual Support (Arabic/English)

- Docker & CI/CD

Live AI Vision - Real-Time Camera Captioning

A real-time computer vision application that generates captions for live webcam feeds using the Azure Computer Vision API, with deployment pipelines, rate limiting, and cost optimization. The main challenge was running the application as efficiently and cost-effectively as possible in a live environment; I explored several approaches to find the best balance between performance and resource consumption.

Tools and Features:

- Azure Computer Vision API

- Real-time Processing

- FastAPI

- Rate Limiting

- Vercel

Voice-TimeLogger-Agent

An AI-powered system that automates tracking of consultants' work hours from voice messages. After each meeting, a consultant records a short voice message, and the system automatically extracts the key details (customer name, start and end times, total hours, and notes) and stores them in Google Sheets.

Tools and Features:

- FastAPI

- OpenAI Whisper

- GPT Models

- Google Sheets API

- n8n Workflow Automation

- Docker
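As an illustration of the "total hours" field only, here is a minimal Python sketch, assuming the language model has already extracted the start and end times as `HH:MM` strings. The `total_hours` helper is hypothetical and not taken from the project.

```python
from datetime import datetime, timedelta


def total_hours(start: str, end: str) -> float:
    """Hypothetical helper: hours between two 'HH:MM' times, rounded to 2 decimals."""
    fmt = "%H:%M"
    start_dt = datetime.strptime(start, fmt)
    end_dt = datetime.strptime(end, fmt)
    if end_dt < start_dt:
        # Assumption: an end time earlier than the start means the meeting ran past midnight.
        end_dt += timedelta(days=1)
    return round((end_dt - start_dt).total_seconds() / 3600, 2)


# A meeting from 09:15 to 11:45 lasts 2.5 hours.
print(total_hours("09:15", "11:45"))
```

Computing the duration deterministically from the extracted times, rather than trusting a model-generated number, is one way to keep the hours column consistent with the recorded start and end times.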

Wikipedia RAG Chatbot

A Streamlit application that uses Retrieval-Augmented Generation (RAG) to answer questions from Wikipedia content. Users enter text directly or provide a Wikipedia URL; the app processes the document into embeddings and a vector store, then answers user questions with AI-generated responses based on the most relevant retrieved passages.

Tools and Features:

- Streamlit

- LangChain

- OpenAI

- FAISS Vector Store

- Wikipedia API
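The retrieve-then-generate flow described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's code: toy word-count vectors stand in for OpenAI embeddings, and a brute-force cosine-similarity search stands in for a FAISS index. `chunk_text`, `embed`, `retrieve`, and `build_prompt` are hypothetical names.

```python
import re

import numpy as np


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z]+", text.lower())


def chunk_text(text: str, size: int = 40) -> list[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(texts: list[str], vocab: dict[str, int]) -> np.ndarray:
    """Toy embedding: word counts over a fixed vocabulary (stand-in for a real embedding model)."""
    vecs = np.zeros((len(texts), len(vocab)))
    for row, text in enumerate(texts):
        for tok in tokenize(text):
            if tok in vocab:
                vecs[row, vocab[tok]] += 1
    return vecs


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question by cosine similarity."""
    vocab = {tok: i for i, tok in enumerate(sorted({t for c in chunks for t in tokenize(c)}))}
    doc_vecs = embed(chunks, vocab)
    q_vec = embed([question], vocab)[0]
    # The small epsilon avoids division by zero for empty vectors.
    norms = np.linalg.norm(doc_vecs, axis=1) * np.linalg.norm(q_vec) + 1e-9
    scores = doc_vecs @ q_vec / norms
    top = np.argsort(scores)[::-1][:k]
    return [chunks[i] for i in top]


def build_prompt(question: str, context: list[str]) -> str:
    """Assemble the grounded prompt that would be sent to the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


chunks = [
    "Paris is the capital of France.",
    "The Nile is a river in Africa.",
    "Mount Everest is the highest mountain.",
]
print(retrieve("What is the capital of France?", chunks, k=1))
```

Replacing `embed` with a real embedding model and the brute-force search with a vector index changes retrieval quality and speed, but not the overall shape of the pipeline.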

Fine-tuning Projects

RoBERTa-base Plant NER

A custom RoBERTa-based named entity recognition (NER) model that identifies fruit and vegetable entities in text using a custom taxonomy. It was fine-tuned from the RoBERTa transformer architecture and is available on the Hugging Face Model Hub.

Tools and Features:

- Named Entity Recognition (NER)

- spaCy

- RoBERTa transformer

- Hugging Face

20 Newsgroups Classification, Question Answering, and Summarization with BERT, BART, and RoBERTa

This repository provides a Flask web application that uses BERT, BART, and RoBERTa models for NLP tasks on the 20 Newsgroups dataset: it classifies articles, generates concise summaries, and answers user questions.

Tools and Features:

- BERT

- BART

- RoBERTa

- Hugging Face

- Flask

- Summarization

- Question Answering

LLAMA-2-7B-MiniGuanaco

LLAMA-2-7B-MiniGuanaco is a Llama-2-7B model fine-tuned on a 3k-row version of the Guanaco dataset using 4-bit NF4 quantization.

Tools and Features:

- LLAMA-2-7B

- MiniGuanaco Dataset

- 4-bit Quantization

- bitsandbytes

OpenHermes-2.5-Mistral-7B-Orca-DPO

This project trains the OpenHermes-2.5-Mistral-7B model with Direct Preference Optimization (DPO), using the bitsandbytes library for optimization.

Tools and Features:

- Mistral-7B

- Orca-DPO

- bitsandbytes
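For reference, DPO trains the policy to prefer the chosen response over the rejected one, measured relative to a frozen reference model. Below is a minimal per-pair sketch of that loss, assuming the summed log-probabilities of each response have already been computed; `dpo_loss` is a hypothetical name and `beta=0.1` is an illustrative value, not the project's setting.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """Per-pair DPO loss: -log(sigmoid(beta * (chosen log-ratio - rejected log-ratio)))."""
    chosen_ratio = policy_chosen - ref_chosen
    rejected_ratio = policy_rejected - ref_rejected
    margin = beta * (chosen_ratio - rejected_ratio)
    # -log(sigmoid(m)) equals log(1 + exp(-m)), i.e. softplus(-m).
    return softplus(-margin)


# When the policy still matches the reference model, the loss is log(2).
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
```

The loss falls below log(2) once the policy raises the chosen response's likelihood (relative to the reference) more than the rejected one's. Libraries such as Hugging Face TRL provide a batched trainer for this objective (`DPOTrainer`).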

DistilBERT-MRPC-Lightning-DeepSpeed

This project fine-tunes DistilBERT on the MRPC (Microsoft Research Paraphrase Corpus) task, using PyTorch Lightning and DeepSpeed for efficient, scalable training.

Tools and Features:

- DistilBERT

- MRPC Task

- PyTorch Lightning

- DeepSpeed

Falcon-7B-Instruct-TruthfulQA

This model is a fine-tuned version of `tiiuae/falcon-7b-instruct`, trained with the QLoRA technique on the TruthfulQA dataset.

Tools and Features:

- tiiuae/falcon-7b-instruct

- TruthfulQA Dataset

- QLoRA

- H100 GPUs

Megatron-GPT2-Classification

Megatron-GPT2-Classification is a language model trained with the Megatron and Accelerate frameworks. It is fine-tuned for classification tasks using distributed training across four RTX 4070 GPUs.

Tools and Features:

- Megatron

- Accelerate

- Distributed Training

- 4 GPUs (RTX 4070)

Mistral-NeuralHermes-Merge-7B-slerp

Mistral-NeuralHermes-Merge-7B-slerp is a merged model that uses spherical linear interpolation (SLERP) to blend layers from two distinct transformer-based models.

Tools and Features:

- SLERP Technique

- MergeKit

- Transformer-Based Models

- Mistral-7B

- NeuralHermes-2.5
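SLERP interpolates along the arc between two vectors instead of the straight line between them: for angle θ between v0 and v1, slerp(t) = sin((1 − t)θ)/sin θ · v0 + sin(tθ)/sin θ · v1. Below is a minimal NumPy sketch for one pair of weight tensors. It is not MergeKit's implementation; a real merge applies the operation tensor by tensor, with an interpolation factor `t` that may vary across layers.

```python
import numpy as np


def slerp(t: float, v0: np.ndarray, v1: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Spherical linear interpolation between two weight tensors of the same shape."""
    # Measure the angle between the tensors using normalized, flattened copies.
    u0 = v0.ravel() / (np.linalg.norm(v0) + eps)
    u1 = v1.ravel() / (np.linalg.norm(v1) + eps)
    cos_theta = float(np.clip(np.dot(u0, u1), -1.0, 1.0))
    # Nearly parallel (or antiparallel) tensors make sin(theta) close to zero,
    # so fall back to plain linear interpolation.
    if abs(cos_theta) > 0.9995:
        return (1 - t) * v0 + t * v1
    theta = np.arccos(cos_theta)
    sin_theta = np.sin(theta)
    w0 = np.sin((1 - t) * theta) / sin_theta
    w1 = np.sin(t * theta) / sin_theta
    return w0 * v0 + w1 * v1


# Halfway between two orthogonal unit vectors stays on the unit circle.
print(slerp(0.5, np.array([1.0, 0.0]), np.array([0.0, 1.0])))
```

Unlike a plain average, which would give [0.5, 0.5] in the example, SLERP keeps the interpolated vector at unit length.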

Machine Learning & Deep Learning Projects

ML AutoTrainer Engine

ML AutoTrainer Engine is a Streamlit app that automates the machine learning workflow. It provides a user-friendly, code-free interface for data processing, model training, and prediction.

Tools and Features:

- Streamlit

- AutoML

- Web App

- Python

- Machine Learning Pipelines

Churn Prediction End-to-End Machine Learning Pipeline

An end-to-end machine learning pipeline for churn prediction, focused on efficient model deployment with a Flask API, Docker, and Amazon EC2. Its modular architecture keeps behavior consistent across environments, and a CI/CD pipeline built on GitHub Actions automates development and deployment.

Tools and Features:

- Docker

- Flask

- XGBoost

- AWS-EC2

- CI/CD

Diabetes Progression Predictor

This project predicts diabetes progression using an end-to-end ML workflow: MLflow for experiment tracking, Docker for containerization, TensorFlow Serving for model deployment, and GitHub Actions for CI/CD.

Tools and Features:

- Docker

- MLflow

- TensorFlow Serving

- MLOps

- CI/CD

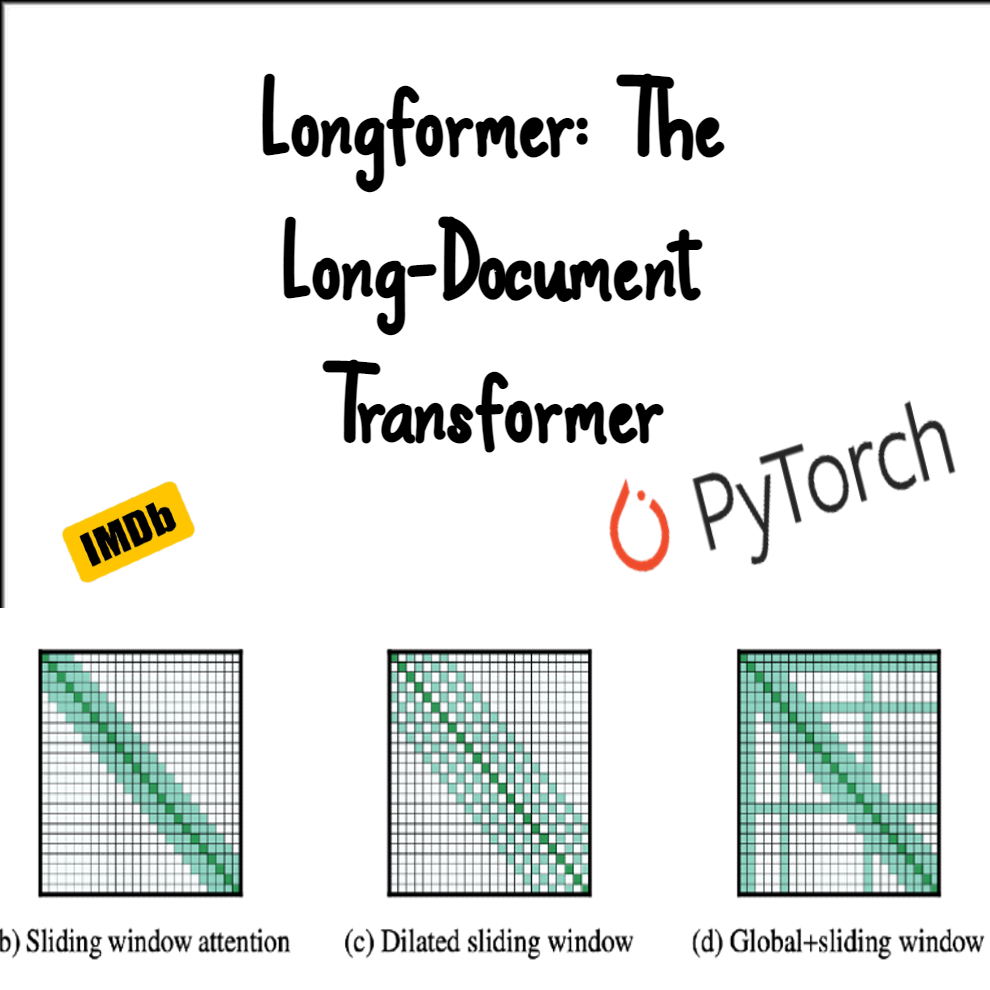

Longformer Learning: Next Generation Sentiment Analysis

This project applies the Longformer model to sentiment analysis on the IMDB movie review dataset. Longformer, introduced in "Longformer: The Long-Document Transformer," handles long documents by combining sliding-window (local) attention with global attention on selected tokens, so attention cost grows linearly rather than quadratically with sequence length.

Tools and Features:

- PyTorch

- Transformers

- Attention

- Python
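The attention pattern described above can be pictured as a boolean mask. Below is a minimal NumPy sketch, assuming a symmetric window and a chosen set of global positions; `longformer_mask` is a hypothetical helper. It builds a dense n×n matrix purely for illustration, whereas Longformer computes the banded pattern without materializing it, which is what keeps the cost linear. In Hugging Face `transformers`, the window size comes from the model config's `attention_window`, and global positions are flagged through the `global_attention_mask` input.

```python
import numpy as np


def longformer_mask(seq_len: int, window: int, global_idx=()) -> np.ndarray:
    """mask[i, j] is True when token i may attend to token j."""
    half = window // 2
    pos = np.arange(seq_len)
    # Sliding-window (local) attention: each token sees neighbors within `half` positions.
    mask = np.abs(pos[:, None] - pos[None, :]) <= half
    for g in global_idx:
        mask[g, :] = True  # a global token attends to every position
        mask[:, g] = True  # and every position attends to it
    return mask


# 8 tokens, window of 2 (one neighbor per side), token 0 global (e.g. a classification token).
print(longformer_mask(8, 2, global_idx=[0]).astype(int))
```

For sentiment classification, a global token at the start of the sequence lets a single position aggregate information from the whole review while every other token only looks locally.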

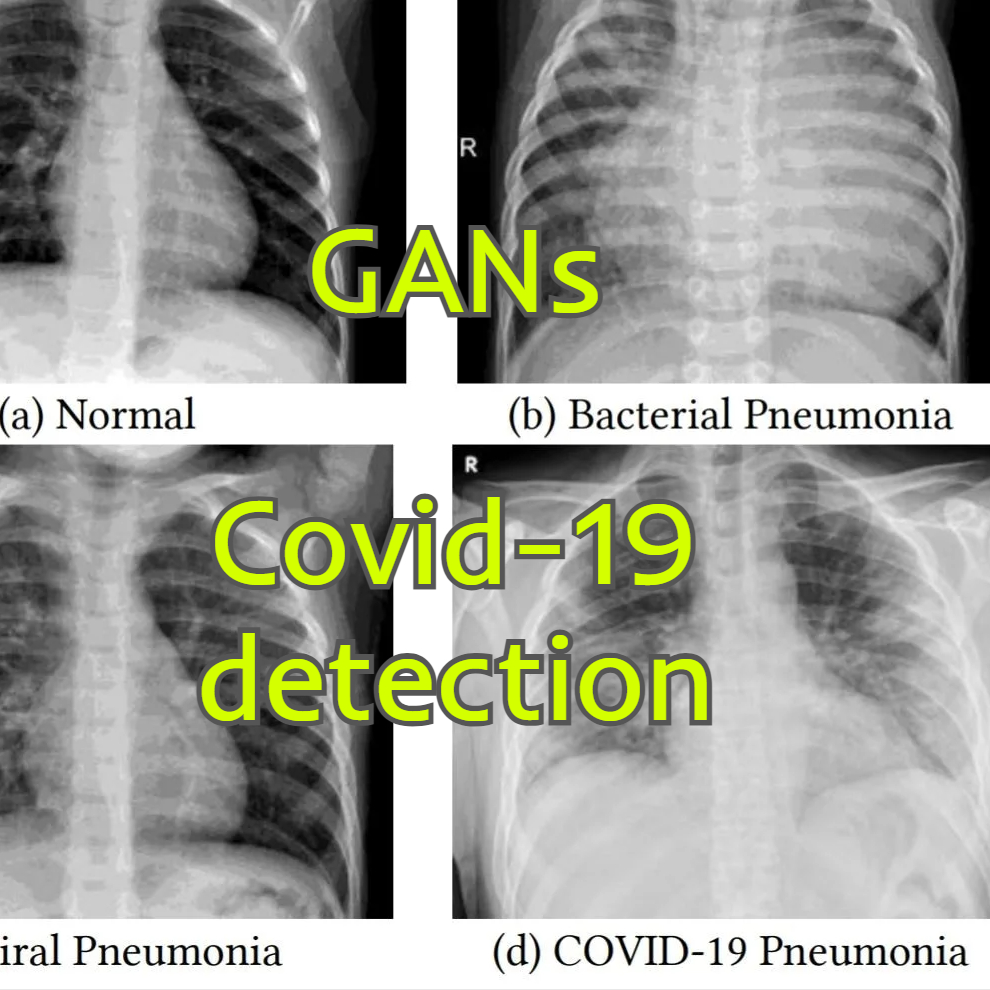

ChestXGAN: Generating Synthetic Chest X-Ray Images using GANs for Covid-19 Detection

ChestXGAN is a deep learning project that uses Generative Adversarial Networks (GANs) to generate realistic synthetic chest X-ray images for COVID-19 detection. These synthetic images help train and evaluate machine learning models that detect COVID-19.

Tools and Features:

- TensorFlow

- GANs

- ResNet-50

- Python

IrisFlow: MLOps Project with Flask, Docker, CI/CD, and Kubernetes

An end-to-end MLOps project integrating Flask, Docker, CI/CD (GitHub Actions), and Kubernetes. This repo demonstrates the development, containerization, automated deployment, and scaling of a simple ML model for iris classification.

Tools and Features:

- Docker

- Kubernetes

- Flask

- MLOps

- CI/CD

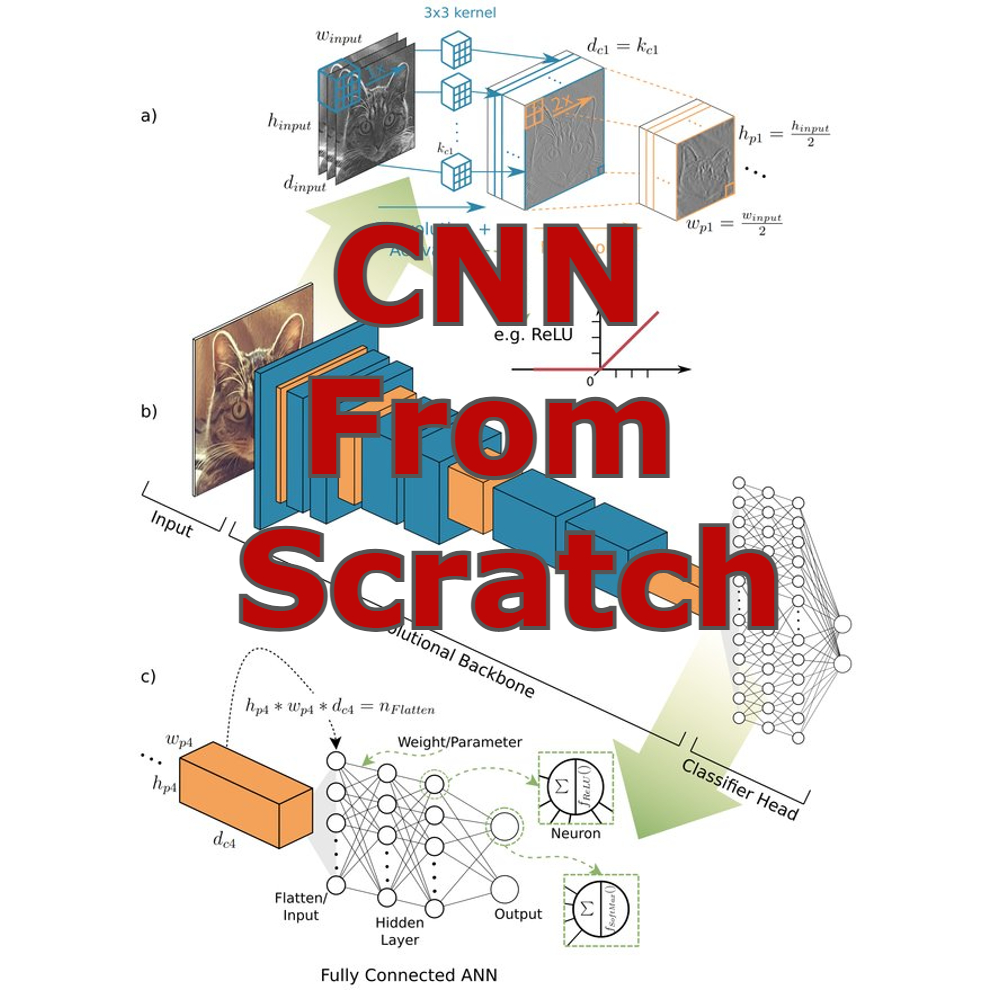

MathConvNet: Mathematical Convolutional Neural Network Implementation from Scratch

This project implements a Convolutional Neural Network (CNN) from scratch in NumPy, working directly from the underlying mathematics. It covers the core components of a standard network: forward and backward propagation, convolutional and pooling layers, fully connected layers, and activation functions. Built on linear algebra, calculus, and statistics, it offers a detailed view of the mechanics underlying deep learning models.

Tools and Features:

- Deep Learning

- NumPy

- ConvNet

- Python
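To make the forward and backward passes concrete, here is a minimal NumPy sketch of a single-channel convolutional layer with no padding. Like most deep learning libraries, it computes cross-correlation. The functions are hypothetical illustrations, not code from the MathConvNet repository.

```python
import numpy as np


def conv2d_forward(x: np.ndarray, w: np.ndarray, b: float = 0.0, stride: int = 1) -> np.ndarray:
    """Valid (no-padding) 2D cross-correlation of a single-channel input with one kernel."""
    H, W = x.shape
    kh, kw = w.shape
    out_h = (H - kh) // stride + 1
    out_w = (W - kw) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = x[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(patch * w) + b
    return out


def conv2d_backward(x: np.ndarray, w: np.ndarray, dout: np.ndarray, stride: int = 1):
    """Given dL/dout, return (dL/dx, dL/dw, dL/db) for conv2d_forward."""
    kh, kw = w.shape
    dx = np.zeros_like(x, dtype=float)
    dw = np.zeros_like(w, dtype=float)
    db = dout.sum()  # the bias is added to every output position
    for i in range(dout.shape[0]):
        for j in range(dout.shape[1]):
            r, c = i * stride, j * stride
            # Each output depends on one input patch and the whole kernel.
            dw += dout[i, j] * x[r:r + kh, c:c + kw]
            dx[r:r + kh, c:c + kw] += dout[i, j] * w
    return dx, dw, db


x = np.arange(16.0).reshape(4, 4)
w = np.array([[1.0, 0.0], [0.0, -1.0]])
print(conv2d_forward(x, w))
```

A finite-difference check (perturb one weight, re-run the forward pass, compare the change in loss with `dw`) is the usual way to confirm that a hand-written backward pass like this one is correct.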

Amazon Review NLP: Sentiment Analysis for Customer Reviews

AmazonReviewNLP is a deep learning project that uses Bidirectional Long Short-Term Memory (LSTM) networks, a type of Recurrent Neural Network (RNN), for sentiment analysis of Amazon customer reviews.

Tools and Features:

- TensorFlow

- Bidirectional LSTM

- Python

Misc Projects

TrustCheck - Real-Time Sanctions Compliance Platform

An enterprise-grade, cloud-native sanctions compliance platform offering real-time monitoring, intelligent change detection, and automated alerting. Built with a modern Python stack (FastAPI, Celery, SQLAlchemy) and deployed on AWS using Terraform for complete Infrastructure as Code (IaC) automation.

Tools and Features:

- Terraform

- AWS

- FastAPI

- Celery

- Docker

- Redis

- GitHub Actions

Doctor Who Web APIs

This project is a .NET 7 Web API application that serves as the backend for managing Doctor Who-related data. It supports CRUD operations for doctors, episodes, and authors, as well as adding companions and enemies to episodes. It uses Entity Framework Core for data access, AutoMapper for object mapping, and FluentValidation for input validation.

Tools and Features:

- C#

- ASP.NET

- Web APIs

- Entity Framework (EF) Core

Doctor Who Database Project

The Doctor Who Database Project is a database based on the British science fiction television program Doctor Who. It stores information about the Doctor Who universe, including data on episodes, doctors, companions, and enemies.

Tools and Features:

- Transact-SQL

- SQL Server